1 Virtual Health Sciences of the Navarrese Department of Health, Navarra, Spain

2 Virtual Health Sciences Library of the Balearic Islands (Bibliosalut), Palma de Mallorca, Spain

3 Andalusian eHealth Library. Ministry of Health of the Regional Government of Andalucia, Sevilla, Spain

4 Health Sciences Library of Catalonia. Ministry of Health of the Regional Government of Catalonia, Barcelona, Spain

5 Rebisalud, Spain

Corresponding author: Idoia Gaminde, igamindi@navarra.es

Abstract

This is a cooperation project whereby the group of eHealth library members of Rebisalud (eHealth Libraries Network – http://www.rebisalud.org) have developed a core set of quality indicators to measure and evaluate the services provided by the newly implemented eHealth libraries in Spain. This core set will help us to understand objectively the functioning of the different services provided by the libraries, as well as to facilitate the comparison of our libraries to learn from each other in order to improve our services.

Methods: The standard ISO 11620 (Library performance indicators) was reviewed. First, we developed a classification scale to screen the indicators, focusing on virtual libraries; three independent reviewers rated each indicator with that scale. Second, the reviewers independently calculated each selected indicator to assess its feasibility. Third, a core of 17 indicators was selected. Finally, a consensus was reached among the leaders of the eHealth libraries that are members of Rebisalud.

Results: With the classification scale, only 20% of the ISO indicators were selected; most of the excluded indicators related to non-virtual aspects such as physical facilities. We found important problems with definitions and concepts, as well as with the terms used among our libraries.

As a result of the whole process 20 indicators were defined. They are classified in terms of: structure (human and economic resources, electronic collection), process (use of resources, access) and results (efficiency, user satisfaction).

Each indicator is described by name, code, definition, aim, method, interpretation, and information source.

Conclusions: The cooperation has been very productive and will allow us to carry out continuous benchmarking among the eHealth libraries of Rebisalud. However, more work remains, because we still need to construct a user satisfaction questionnaire.

Keywords: Libraries, Digital; Libraries, Medical; Library Administration; Library Surveys

Introduction

Eight years ago, the eHealth libraries of the Spanish Autonomous Communities agreed to collaborate on different projects. This was the origin of the network Rebisalud (Red de Bibliotecas de Salud, eHealth Libraries Network – http://www.rebisalud.org). Three main areas of collaborative work were set up: quality, buying club consortia, and web area.

In the Quality group the aims are: to develop a core set of quality indicators for all the Health Virtual Libraries in Spain; to facilitate the task of objectively measuring the performance of the Health Libraries; to set up indicators that back up the actions of the Virtual Libraries; to improve our visibility; and, finally, to be able to compare our eHealth libraries in Spain with the equivalent libraries in the rest of the world.

Here we present the first step of the project: the development of a core set of quality indicators to measure and evaluate the services provided by the newly implemented eHealth libraries in Spain.

Methods

The standard ISO 11620:2014 (an international standard with performance indicators for libraries)1 was reviewed. First, we developed a classification scale (table 1) to screen the indicators, which the standard divides into four categories (resources, access and infrastructure; use; efficiency; and potentials and development), focusing on virtual libraries; three independent reviewers from three different virtual libraries rated each indicator with that scale. Second, the reviewers independently calculated each selected indicator to assess its feasibility. Third, a core of 17 indicators was selected. Finally, a consensus was reached among the leaders of the eHealth libraries that are members of Rebisalud.

Results

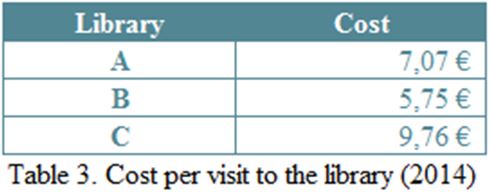

With the classification scale used to screen the ISO performance indicators (see table 2), 21% (11/52) were classified as A (easy to calculate); 11% (6/52) as B (difficult to calculate, but worth recommending if interesting); 2% (1/52) as C (problems with the definition); and 34% (18/52) as not applicable to Virtual Libraries. There was no agreement on 30% (16/52). For B.2.1 (see table 2), for example, the disagreement concerned the feasibility of calculating costs as defined by the standard. Another example is the indicators related to user satisfaction, which require the network to construct a questionnaire to be shared by all of us.
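The shares above follow directly from the counts per category. A minimal sketch of that arithmetic, using only the counts reported in this paragraph (the labels and the function name `shares` are illustrative, not part of the project), is given here. Values are printed to one decimal, so they may differ by up to a point from the whole-number percentages reported in the text.

```python
# Counts per screening category, as reported in the text (52 ISO indicators screened).
counts = {
    "A (easy to calculate)": 11,
    "B (difficult to calculate, worth recommending)": 6,
    "C (problems with the definition)": 1,
    "Not applicable to virtual libraries": 18,
    "No agreement among reviewers": 16,
}


def shares(category_counts):
    """Return each category's share of all screened indicators, in percent (one decimal)."""
    total = sum(category_counts.values())
    return {label: round(100 * n / total, 1) for label, n in category_counts.items()}


total = sum(counts.values())
result = shares(counts)

for label, pct in result.items():
    print(f"{label}: {counts[label]}/{total} = {pct}%")
```

Checking that the counts add up to the 52 indicators screened is a quick way to confirm that every indicator falls into exactly one category.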

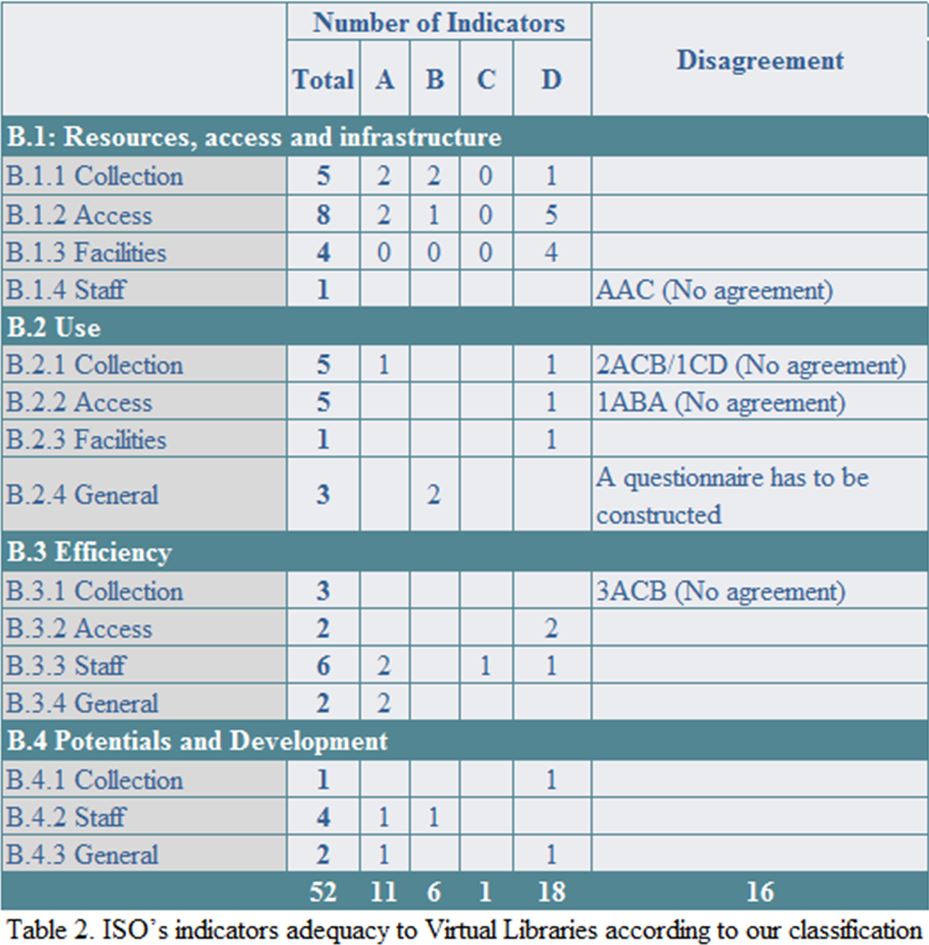

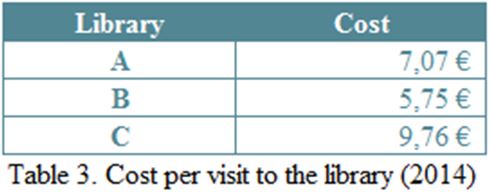

As a result of this first stage, 17 indicators were defined; in a second review, three new indicators of our own on the document supply service were added. They are classified in terms of: structure (human and economic resources, electronic collection), process (use of resources, access) and results (efficiency, user satisfaction). Once the core set of indicators was selected, we took a step forward and agreed to calculate the indicators to test their feasibility, as a benchmarking exercise. This allowed us to learn the sort of problems that have to be faced when comparing different libraries. An example of our benchmarking exercise can be seen in table 3, which compares the cost per visit to the library.

The debate led us to redefine some of the numerators and denominators of the selected indicators, e.g. costs and visits to the library. For costs, we had to agree on what sort of costs were going to be considered and whether we were all able to conform to that definition. For visits, we realized that the number of visits to the library webpage was probably not a good equivalent of library visits in physical terms.
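To make the numerator and denominator discussion concrete, the cost-per-visit indicator can be sketched as a simple ratio. This is only an illustration under stated assumptions: the class `LibraryYear`, the function `cost_per_visit`, the library names and all figures are hypothetical and are not data from the network. Which costs enter the numerator and what counts as a visit are exactly the definitions the libraries had to agree on.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class LibraryYear:
    """One library's figures for one year (hypothetical structure)."""
    name: str
    total_cost: float  # numerator: only the cost items all libraries agreed to include
    visits: int        # denominator: visits, counted under the agreed definition


def cost_per_visit(lib: LibraryYear) -> Optional[float]:
    """Return cost divided by visits, or None when no visits were recorded."""
    if lib.visits <= 0:
        return None
    return lib.total_cost / lib.visits


# Invented figures, for illustration only.
libraries = [
    LibraryYear("Library X", total_cost=500_000.0, visits=250_000),
    LibraryYear("Library Y", total_cost=300_000.0, visits=100_000),
]

for lib in libraries:
    print(f"{lib.name}: {cost_per_visit(lib):.2f} per visit")
```

Two libraries can only be compared on this figure if both fill `total_cost` and `visits` under the same definitions; otherwise the ratio reflects differences in accounting rather than in performance.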

The calculation of these 17 indicators prompted a very interesting debate on the meaning of each indicator, i.e. what was behind each figure. For example, we realized that some libraries have an open and free document delivery service while others do not; the policy of each library will therefore affect the interpretation of the indicator, rather than the calculation itself.

Each indicator is described by name, code, definition, aim, method, interpretation, and information source.
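One possible way to hold such a description sheet is a small record type with the seven fields listed above. The sketch below is an assumption about how this could be done, not the format used by the network: the class `IndicatorSheet`, the helper `missing_fields`, the code `EXAMPLE-01` and all field contents are hypothetical.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class IndicatorSheet:
    """Description sheet for one indicator, with the seven fields named in the text."""
    name: str
    code: str
    definition: str
    aim: str
    method: str
    interpretation: str
    information_source: str


def missing_fields(sheet: IndicatorSheet) -> list:
    """Return the names of fields left empty, so incomplete sheets can be spotted."""
    return [f.name for f in fields(sheet) if not getattr(sheet, f.name).strip()]


# Hypothetical sheet; the wording of every field is invented for illustration.
example = IndicatorSheet(
    name="Cost per visit",
    code="EXAMPLE-01",
    definition="Agreed library costs divided by the agreed count of visits.",
    aim="Relate the cost of the service to its use.",
    method="Divide the yearly cost by the yearly number of visits.",
    interpretation="Depends on the cost and visit definitions shared by the libraries.",
    information_source="",  # deliberately left empty to show the completeness check
)

print(missing_fields(example))
```

Keeping every indicator in the same structure makes it easier to check that all libraries document their figures in the same way before comparing them.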

Most of the rejected indicators were related to non-virtual aspects, such as physical facilities, shelving, and internet access. We found important problems with definitions and concepts, as well as with the terms used among our libraries.

We know that this is just a tiny step towards quality measurement, but our learning during the process has been huge, and we hope to be able to take a step forward with the first benchmarking exercise for our network (Rebisalud).

Conclusions

The cooperation has been very productive and will allow us to carry out continuous benchmarking among the eHealth libraries of Rebisalud. However, more work remains, because we still need to construct a user satisfaction questionnaire.

REFERENCES

1. ISO 11620:2014. Information and documentation – Library performance indicators.