F1 – Research performance assessment in Europe: an analysis of the professionals involved

Giuse Ardita1, Massimiliano Carloni2

1Istituto Superiore di Sanità – Library, Rome, Italy

2Thomson Reuters – IP & S, Rome, Italy

Corresponding author: Giuse Ardita, giuse.ardita@iss.it

Abstract

Introduction. Research evaluation has an increasingly strong influence on scientists' career advancement and on funding allocation, and peer review is still, by definition, the main qualitative evaluation method. Nevertheless, since the early 20th century (Gross and Gross, 1927), and particularly from the middle of the century (Garfield, 1955), several scientists have designed quantitative tools for evaluating journals, papers, authors, institutions, etc., with the aim of helping to overcome the limits of human judgment.

Objective. This study aims to provide an analytic overview of studies published in Europe on what should be a specialized section of Library and Information Science (LIS): quantitative research evaluation and bibliometrics. Our intent was to analyze the provenance of a statistically significant subset of the continuously growing body of studies on quantitative evaluation parameters, to find out which research areas are most involved in this context and which countries and authors are most productive, and to assess their impact on the basis of citation figures.

Methods. The analysis was conducted on the complete Web of Science™ Core Collection (WoS-CC) over a forty-year time frame, from 1975 to 2015, since 1975 may be considered the first year in which Garfield actually used the Impact Factor (IF) algorithm to produce the Journal Citation Reports® (JCR). A search on related keywords (bibliometrics, scientometrics, “research performance evaluation”, etc.) was performed in the Topic field. The result set was then filtered by document type (articles, reviews, proceedings papers and book chapters) and further refined to European authors only (i.e. authors affiliated with European institutions), with a deeper focus on the Italian scenario.

Results. A detailed analysis from various perspectives showed that authors have quite different scientific backgrounds; that the most prolific authors do not necessarily belong to the most prolific countries; and that a good share of the top-cited authors work in scientific research areas often far from LIS, which nonetheless accounts for a high percentage of the total number of publications.

Conclusions. Our analysis highlighted research performance evaluation as a subject of great and increasing interest also among scientists working in non-LIS areas, so it may be considered a topic that cuts across all scientific disciplines. In our opinion, a joint commitment of LIS and non-LIS professionals is the best way forward.

Keywords: Information Management; Research evaluation; Bibliometrics; Evaluation parameters; Europe; Impact

Introduction

Since Eugene Garfield(1) described the central role of citations as the fundamental linkage between ideas, papers and authors, a large number of studies have been performed in this area. Many terms have also been coined to define each specific area of study(2), and many studies have been carried out to define the meaning, value and limits of bibliometric tools and techniques. In the last decade, many researchers have also developed new tools (h-index, g-index, etc.)(3)(4) to bring quantitative evaluation increasingly closer to the qualitative assessment of research.
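Both indicators cited above have compact definitions. As a minimal sketch, assuming an author's output is given as a plain list of per-paper citation counts, they can be computed as follows (the function names are ours, chosen for illustration; this version of the g-index caps g at the number of papers, while some variants pad the list with zero-citation papers):

```python
def h_index(citations):
    """Largest h such that h papers have at least h citations each (Hirsch, 2005)."""
    ranked = sorted(citations, reverse=True)
    h = 0
    for rank, cites in enumerate(ranked, start=1):
        if cites >= rank:
            h = rank
        else:
            break
    return h


def g_index(citations):
    """Largest g such that the top g papers together have at least g**2 citations (Egghe, 2006)."""
    ranked = sorted(citations, reverse=True)
    g, total = 0, 0
    for rank, cites in enumerate(ranked, start=1):
        total += cites  # cumulative citations of the top `rank` papers
        if total >= rank * rank:
            g = rank
    return g


# Hypothetical author with five papers.
print(h_index([10, 8, 5, 4, 3]))  # 4
print(g_index([10, 8, 5, 4, 3]))  # 5
```

For this hypothetical record the g-index exceeds the h-index because it gives credit for the citations of the most cited papers beyond the h threshold.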

Because quantitative measures are applied to promotions and funding allocation, no researcher in the international scientific landscape seems immune to, or untroubled by, the never-ending debate on “quantitative research evaluation”.

For quite a few years, citation analysis and bibliometric techniques have been a very important research field, no longer mainly to reveal conceptual relationships between papers and authors, as Garfield proposed in his paper(1), but to provide research evaluation services.

Objective

The starting point of our study was the seemingly trivial observation that, with the exception of North America and perhaps the UK, the bibliometrician has not yet been clearly codified as a profession. Papers centered on bibliometric issues seem to be authored by professionals across all research areas, and more often than not they are published in journals outside the Library and Information Science (LIS) area. Moreover, many authors perform quantitative studies on the literature of their own professional area (medicine, surgery, etc.) using self-taught expertise(5).

To determine the statistical trends of the literature on bibliometric issues, and more generally on quantitative research evaluation in Europe, we conducted a statistical analysis of studies published in this specific area, in order to detect what kind of professionals are involved in what we broadly term “quantitative research evaluation”.

Other recent studies have examined worldwide contributors to the literature of library and information science in general(6); as for Europe, a similar but more general study(7) was performed in Scopus on journals listed under the “Library and Information Science” subject category. Our study instead has a more specific focus.

Methods

Our analysis was performed on the Web of Science™ Core Collection (WoS-CC). We considered a forty-year period: from the beginning of 1975, the year in which the world's first bibliometric platform, Journal Citation Reports® (JCR)(8), began to be published, to the end of 2015.

To retrieve our dataset, we examined a large number of articles on research evaluation and selected the most frequently used author keywords and KeyWords Plus (terms extracted in WoS-CC from the titles of cited references). We built our search strategy on these: the selected terms were combined with OR in the Topic search field. The results were refined by document type: ARTICLE and REVIEW (the types counted in JCR). PROCEEDINGS PAPER and BOOK CHAPTER were also added, in order to include documents from the social sciences and humanities.
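As a sketch of this search strategy, the snippet below composes an OR query for the Topic field, assuming the `TS=` tag of WoS advanced search, and checks an exported record against the four document types retained. Only the three keywords quoted in the abstract are used, because the full keyword list is not reported; the semicolon-separated document-type string is an assumption about the export format, and all names are hypothetical.

```python
# Hypothetical helpers: the full keyword list used in the study is not reported,
# so only the three terms quoted in the abstract appear here.
ALLOWED_TYPES = {"ARTICLE", "REVIEW", "PROCEEDINGS PAPER", "BOOK CHAPTER"}


def build_topic_query(terms):
    """Combine terms with OR inside a WoS advanced-search Topic (TS=) clause.

    Multi-word terms are quoted so that they are matched as exact phrases.
    """
    parts = []
    for term in terms:
        term = term.strip()
        if term:  # skip empty entries
            parts.append(f'"{term}"' if " " in term else term)
    return "TS=(" + " OR ".join(parts) + ")"


def keep_record(doc_types):
    """True if any of a record's semicolon-separated document types is allowed."""
    return any(t.strip().upper() in ALLOWED_TYPES for t in doc_types.split(";"))


query = build_topic_query(["bibliometrics", "scientometrics", "research performance evaluation"])
print(query)  # TS=(bibliometrics OR scientometrics OR "research performance evaluation")
print(keep_record("Article; Proceedings Paper"))  # True
print(keep_record("Editorial Material"))  # False
```

Filtering on document type after retrieval, as here, mirrors the refine step described above rather than encoding it in the query string.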

The dataset was further restricted to European countries (Table 1), yielding a total of 7180 records (our last search was performed in March 2016, to ensure that the great majority of items published in 2015 had been indexed).

We deliberately adopted this approach: an investigation based on all the articles matching our queries, potentially published in any journal, rather than on the contributions of a particular set of authors or journals, as in many previous similar studies. This approach allowed us to include different specializations and expertise across a wide range of disciplines.

We analyzed the final dataset from different perspectives, using the analysis tools available in WoS-CC and InCites.

Data Analysis

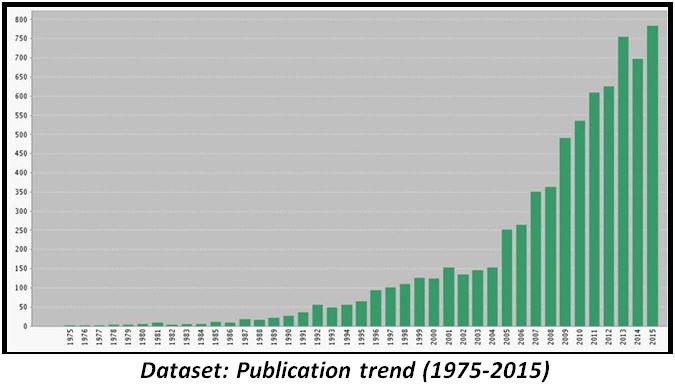

About 75% of the dataset consisted of research articles, and half of these were published in the last five years of the period under consideration (Table 2). This alone is a first piece of evidence of the growing international interest in the issue.

Production/Countries

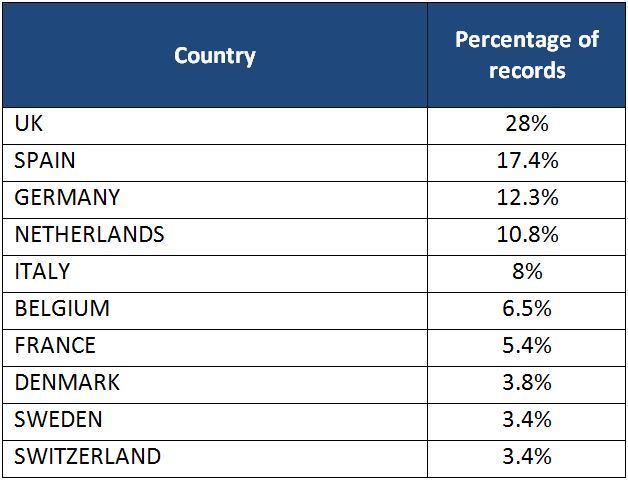

We first considered the raw production of each country. At the top of the ranking (Table 3) stands the UK, with almost 25% of total European production, followed by Spain, Germany, the Netherlands and Italy. These data are fully in line with other studies(7), even though our analysis covers a longer and more recent time range. Apart from slight differences in the percentages, the ranking is the same for the period 2006-2015.

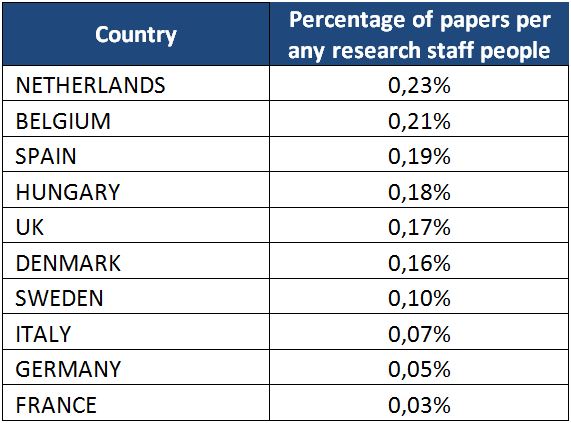

If we instead relate the same data to the number of researchers per country (data from the Eurostat website) (Table 4), the ranking is completely different: the Netherlands is at the top, followed by Belgium and Spain.
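The move from raw counts (Table 3) to counts relative to the research workforce (Table 4) is a simple ratio. The sketch below uses hypothetical country labels and figures, not the Eurostat data used in this study, and an arbitrary scale of publications per 1000 researchers:

```python
def per_researcher_ranking(publications, researchers, per=1000):
    """Rank countries by publications per `per` researchers, highest rate first.

    Countries with a missing or zero researcher count are skipped.
    """
    rates = {}
    for country, pubs in publications.items():
        n = researchers.get(country)
        if n:
            rates[country] = pubs * per / n  # publications per `per` researchers
    return sorted(rates.items(), key=lambda kv: kv[1], reverse=True)


# Hypothetical figures for two unnamed countries, not the study's data.
ranking = per_researcher_ranking({"A": 1000, "B": 300}, {"A": 200000, "B": 40000})
print(ranking)  # [('B', 7.5), ('A', 5.0)]
```

Country A leads on raw counts (1000 against 300) but falls behind once output is related to the size of its research workforce, which is the kind of reversal observed between the two tables.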

In terms of impact over the last 10 years, citation analysis placed the Netherlands at the top, with an average of almost 18 citations per paper; its first position was confirmed when we considered a more appropriate, field-normalized indicator, the CNCI (Category Normalized Citation Impact).

The analysis of top institutions is, as expected, closely correlated with the country analysis: the top positions are held by the Spanish Consejo Superior de Investigaciones Científicas (CSIC), followed by the University of London and Leiden University.

As already observed by Olmeda-Gómez and de Moya-Anegón(7), “The highest impact ratings were attained by European institutions whose members are prolific authors of papers on informetrics”.

Journals/publications

We retrieved 1874 journal titles, 87 of which belong to the WoS Information Science and Library Science area. The variety of journals in our dataset shows the strong cross-disciplinary interest in quantitative research evaluation. Among the top 25 journals by number of published documents, we found, alongside LIS and Computer Science journals, journals from the medical and clinical areas. The whole set includes journals of economics, psychology and chemistry, and of various medical fields such as neurology, oncology and nursing.

Analyzing the main dataset by WoS-CC subject category (251 in total), we found that almost 40% of the items belong to “Information and Library Science”, almost 30% to disciplines closely related to Computer Science, and the remaining 30% to other disciplines, many of them in medicine and economics.

To study the distribution of publications in more detail, we grouped the WoS Research Areas present in our dataset into five macro-areas, which we called: Information and Library Science; Computer Science and Engineering; Biomedicine; Business Economics & Social Science; Science–Multidisciplinary. The percentages showed that 43% of publications do not belong to LIS or its adjacent areas (Computer Science and Engineering). This is an important finding: bibliometrics, although codified as a scientific discipline in its own right, is quite often used as a “tool” in studies from many different areas; it is thus highly cross-disciplinary, with a noticeable presence as a “topic” even in journals from very distant subject areas.
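The grouping step can be sketched as a lookup table followed by a percentage count. The study names the five macro-areas but does not publish how each WoS Research Area was assigned, so the mapping below is a hypothetical excerpt, and the sketch assumes one Research Area per record:

```python
from collections import Counter

# Hypothetical mapping excerpt: the actual assignment used in the study is not published.
MACRO_AREA = {
    "Information Science & Library Science": "Information and Library Science",
    "Computer Science": "Computer Science and Engineering",
    "Engineering": "Computer Science and Engineering",
    "General & Internal Medicine": "Biomedicine",
    "Oncology": "Biomedicine",
    "Business & Economics": "Business Economics & Social Science",
    "Science & Technology - Other Topics": "Science–Multidisciplinary",
}
LIS_AND_ADJACENT = {"Information and Library Science", "Computer Science and Engineering"}


def macro_area_shares(record_areas, mapping=MACRO_AREA, default="Other"):
    """Percentage of records per macro-area, assuming one Research Area per record."""
    counts = Counter(mapping.get(area, default) for area in record_areas)
    total = sum(counts.values())
    return {macro: 100 * n / total for macro, n in counts.items()} if total else {}


def share_outside_lis(shares):
    """Summed percentage of the macro-areas other than LIS and its adjacent areas."""
    return sum(p for macro, p in shares.items() if macro not in LIS_AND_ADJACENT)


# Hypothetical records, one Research Area each.
areas = ["Information Science & Library Science", "Computer Science", "Engineering", "Oncology"]
shares = macro_area_shares(areas)
print(shares)
print(share_outside_lis(shares))  # 25.0
```

Records assigned to several Research Areas would need either fractional assignment or a primary-area rule; the sketch sidesteps this choice.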

Results

To analyze author types and their characteristics, we split the main dataset into LIS (Information and Library Science and Computer Science) and NOT-LIS (all other areas).

Authors LIS

Publications by authors working in LIS areas account for around 44% of our dataset (Table 5).

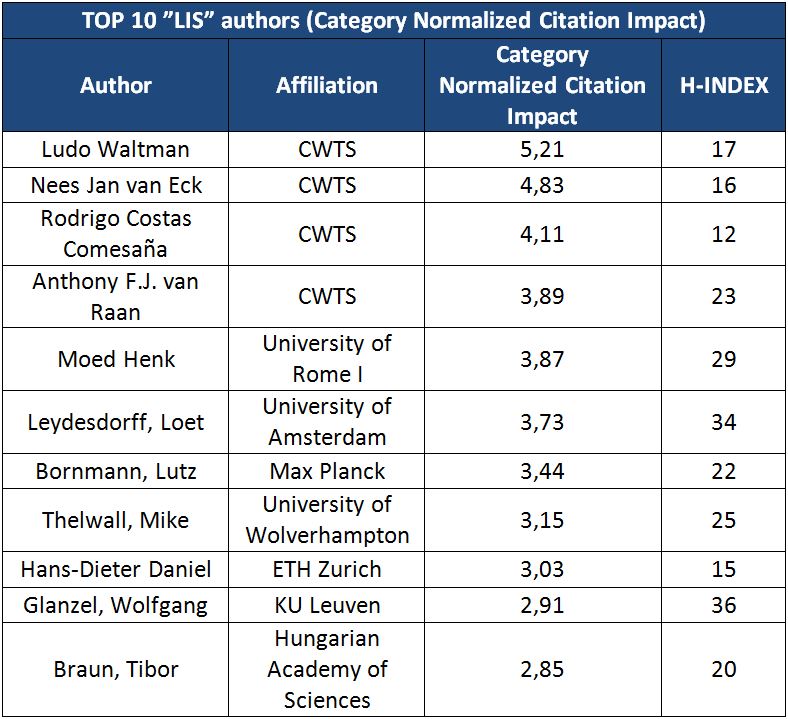

All of the top 25 authors are experienced bibliometricians whose research is devoted almost entirely to quantitative evaluation issues. As for affiliation, 40% of these top 25 authors are based in the Netherlands and Belgium, a percentage that is not surprising given the presence there of some world-renowned bibliometrics research groups (in Leiden, Leuven, Antwerp, etc.).

As for the area of specialization, backgrounds in the Natural Sciences (Mathematics, Chemistry, Physics) predominate in the top 25 LIS authors list.

One possible explanation lies in the high level of statistical and mathematical specialization that the field requires beyond a certain point, and in the lack of familiarity with these techniques among, for example, medical researchers.

Also notable is the consistent presence of authors with a background in the Humanities (Philosophy) or in areas related to the Social Sciences; in the latter case, a possible explanation lies in the historical “affiliation” of library science disciplines with the Social Sciences.

This fact was already noted by Walters and Wilder(6): “Faculty in the natural sciences and LIS are more likely to be found among the top 50 authors than their overall contributions would suggest”.

In terms of Category Normalized Citation Impact, a valuable indicator that compares each paper with its peers (papers published worldwide in the same year, in the same subject area and with the same document type), and considering only the documents in our dataset, members of the CWTS team (Centre for Science and Technology Studies, Leiden University) are by far at the top of the ranking (Table 6).
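The normalization described above can be sketched as follows: each paper's citations are divided by the expected citations, i.e. the mean for world papers sharing its subject category, publication year and document type, and the indicator for a set of papers is the mean of these ratios, so that 1.0 corresponds to the world average. This is a simplified sketch assuming one category per paper and a baseline supplied as plain tuples; it is not the InCites implementation, which must also handle papers assigned to several categories.

```python
from collections import defaultdict
from statistics import mean


def expected_citations(baseline):
    """Mean citations per (category, year, doc_type) cell of a reference 'world' set."""
    groups = defaultdict(list)
    for category, year, doc_type, cites in baseline:
        groups[(category, year, doc_type)].append(cites)
    return {key: mean(values) for key, values in groups.items()}


def cnci(papers, expected):
    """Mean of actual/expected citations over the papers (one category per paper assumed)."""
    ratios = []
    for category, year, doc_type, cites in papers:
        exp = expected.get((category, year, doc_type))
        if exp:  # skip papers whose cell has no baseline or a zero baseline
            ratios.append(cites / exp)
    return mean(ratios) if ratios else None


CAT = "Information Science & Library Science"  # hypothetical example category
baseline = [(CAT, 2010, "Article", 4), (CAT, 2010, "Article", 6), (CAT, 2010, "Review", 20)]
expected = expected_citations(baseline)
papers = [
    (CAT, 2010, "Article", 10),  # 10 / 5  = 2.0
    (CAT, 2010, "Review", 10),   # 10 / 20 = 0.5
]
print(cnci(papers, expected))  # 1.25
```

The example shows why the indicator is fairer than raw citation averages: the same 10 citations count as twice the world average for an article but only half of it for a review in the same cell.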

This would be less evident from the individual h-index computed on the same subset of documents. It is worth mentioning that we used full (whole) counting of authorships, which may have had a distorting effect.
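To make the possible distortion concrete: under full counting every co-author receives one whole credit per paper, whereas fractional counting splits a single credit equally among the co-authors. A minimal sketch with hypothetical author lists (the study itself used full counting only; the function name is ours):

```python
from collections import Counter


def author_credit(papers, fractional=False):
    """Credit per author over a list of papers, each given as a list of author names.

    Full (whole) counting gives every co-author 1 per paper; fractional counting
    splits a single credit equally among the paper's distinct co-authors.
    """
    credit = Counter()
    for authors in papers:
        unique = set(authors)
        if not unique:
            continue
        weight = 1 / len(unique) if fractional else 1
        for author in unique:
            credit[author] += weight
    return credit


# Hypothetical author lists for three papers.
papers = [["A"], ["A", "B"], ["A", "B", "C", "D"]]
print(dict(sorted(author_credit(papers).items())))
# {'A': 3, 'B': 2, 'C': 1, 'D': 1}
print(dict(sorted(author_credit(papers, fractional=True).items())))
# {'A': 1.75, 'B': 0.75, 'C': 0.25, 'D': 0.25}
```

Under full counting the three papers generate seven credits in total, so heavily co-authoring groups are inflated; under fractional counting the credits always sum to the number of papers.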

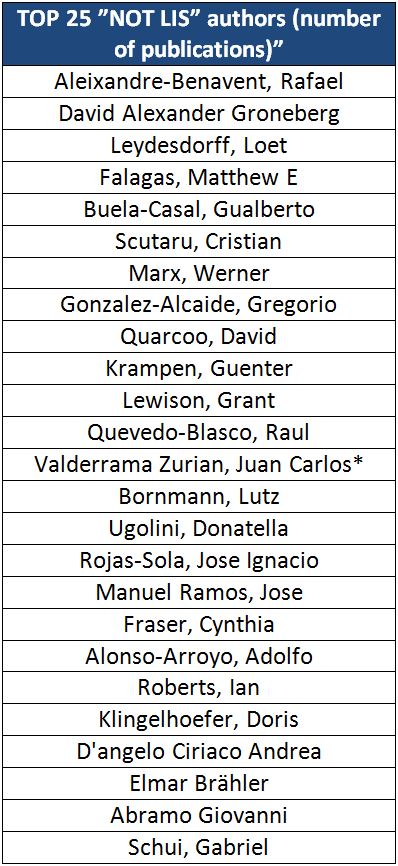

Authors NOT-LIS

Authors in NOT-LIS areas published roughly 56% of all items (Table 7).

Sixty percent of these authors cannot be defined as bibliometricians: they have used bibliometrics and published articles based on bibliometric analysis, but their personal profiles and activities show that their main institutional activity is completely different. Some of them, however, continue to publish research both on bibliometric issues and in their original field of interest.

Crossing country of provenance with profile data revealed some patterns: while in some countries (Italy and the UK, for instance) bibliometric scientists are more likely to publish also in NOT-LIS areas, in others (e.g. Germany) NOT-LIS publications are mostly authored by scientists whose main research area is not bibliometrics.

We then examined in depth the areas of specialization of NOT-LIS authors, to understand whether the data point to a particular study path or research career as the one most likely to lead to work on bibliometrics. Two results prompted some considerations. First, most of the authors who cannot be considered fully expert in bibliometrics work and publish in the medical area. A possible explanation is that scholars in the medical area are used to producing publications under the “publish or perish” rule and, earlier than others, experience for themselves the importance of bibliometrics from an evaluation perspective.

The second finding was the absence of the Natural Sciences from the list of areas of specialization: an unexpected result, considering that some of the most famous bibliometrics experts in the history of this discipline (e.g. E. Garfield and J. Hirsch) have a background in these areas.

Conclusion

Data analysis from different points of view revealed a great variety of professional profiles involved in studying bibliometric issues in general, or the use and validity of quantitative tools. We found that a high percentage of authors (around 30%) belong to areas other than LIS and that their main activity remains completely different. This may be considered highly anomalous in the sciences, since it is very unlikely, for example, for a veterinarian to write on botanical issues, or vice versa.

These unexpected results prompt a few reflections on the reasons behind them. For economic and career reasons, all researchers have to provide numerical evidence of the impact of their scientific production. Willingly or not, they must therefore make extra efforts to obtain and produce their personal bibliometric scores.

Some NOT-LIS authors engage in statistical studies of the literature of their specific areas to highlight research trends or the immediate impact of new discoveries, but in such cases their bibliometric publications are few. More often than not, however, professionals with NOT-LIS backgrounds develop a deep interest in research evaluation; overseas, this was also the case of Garfield, whose first degree was in chemistry, and of Jorge Hirsch, a physicist. But while Garfield dedicated his entire life to evaluation topics, Hirsch, despite being the inventor of the h-index, the first bibliometric indicator conceived as a score for an individual's scientific production, remains fully dedicated to superconductivity: only four or five of his more than 155 published studies concern the h-index and its variants.

Beyond individual initiatives, many academic groups in Europe are also engaged in developing new bibliometric indicators, algorithms and platforms for ranking institutions and individuals; in this case, however, their members are mainly experts in statistics and computer science.

The analysis performed in the present study confirmed our initial idea that, as professionals, “librarians are playing a secondary role in the process of evaluating research activities, usually collaborating as auxiliary providers of raw data extracted from pre-selected sources. Given the subjective nature of the decision committees, there is a strong need for unbiased and objective procedures guaranteed by independent professionals”(9).

We believe that librarians could provide professional support to research evaluation, free from personal interests, and serve as liaison officers between scientists and evaluators, in a joint effort towards fair evaluation.

Moreover, in this changing scenario, also shaped by a worldwide economic crisis, bibliometric techniques and analyses may provide a new business area for university and government libraries acting as interdisciplinary, independent institutions able to centralize research evaluation services(10).

Tables and figures

Table 1

Table 2

Table 3

Table 4

Table 5

Table 6

Table 7

REFERENCES

- Garfield E. Citation indexes for science: a new dimension in documentation through association of ideas. Science. 1955 Jul;122(3159):108-11.

- Mingers J, Leydesdorff L. A review of theory and practice in scientometrics. Eur J Oper Res. 2015;246(1):1-19.

- Hirsch JE. An index to quantify an individual's scientific research output. Proc Natl Acad Sci U S A. 2005;102(46):16569-72.

- Egghe L. Theory and practise of the g-index. Scientometrics. 2006;69(1):131-52.

- Meyers BF. Do we need a bibliometrician to know which way the wind is blowing? J Thorac Cardiovasc Surg. 2016;151(1):23-4.

- Walters WH, Wilder EI. Worldwide contributors to the literature of library and information science: top authors, 2007–2012. Scientometrics. 2015;103(1):301-27.

- Olmeda-Gómez C, de Moya-Anegón F. Publishing trends in library and information sciences across European countries and institutions. J Acad Libr. 2016;42(1):27-37.

- Garfield E. Citation analysis as a tool in journal evaluation. Science. 1972;178(4060):471-9.

- Aguillo I. Informetrics for librarians: describing their important role in the evaluation process. El Profesional de la Información. 201;25(1):5-10.

- Ball R, Tunger D. Bibliometric analysis – a new business area for information professionals in libraries? Support for scientific research by perception and trend analysis. Scientometrics. 2006;66(3):561-77.